Data analysis is a powerful tool for uncovering insights and driving informed decisions. However, the process of analyzing data is fraught with challenges that can undermine its accuracy and effectiveness. In this post, we'll explore some of the most common data analysis challenges and strategies to overcome them.

1. Data Quality Issues

Challenge:

Data quality issues such as missing values, duplicates, and errors can significantly impact the accuracy of analysis.

Solution:

- Data Cleaning: Implement robust data cleaning processes to identify and correct errors, fill missing values, and remove duplicates.

- Validation: Apply validation rules at the point of data entry to reject malformed or out-of-range values before they are stored.

- Automation: Automate data cleaning processes with tools like Python’s pandas library or data quality platforms.
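Validation rules can be written as simple checks that run before a record is accepted. The sketch below illustrates the pattern in plain Python. The field names (`customer_id`, `email`, `age`), the rules and the deliberately loose email pattern are all hypothetical. The approach rejects bad records at entry, with a reason attached, instead of repairing them later.

```python
import re
from typing import Any, Callable

# Hypothetical rules for a customer record: each field maps to a predicate.
RULES: dict[str, Callable[[Any], bool]] = {
    "customer_id": lambda v: isinstance(v, int) and v > 0,
    # Deliberately loose pattern: something@something.something, no spaces.
    "email": lambda v: (
        isinstance(v, str) and re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", v) is not None
    ),
    "age": lambda v: isinstance(v, int) and 0 <= v <= 120,
}


def validate(record: dict[str, Any]) -> list[str]:
    """Return a list of rule violations; an empty list means the record is valid."""
    errors = []
    for field, check in RULES.items():
        value = record.get(field)
        if value is None:
            errors.append(f"{field}: missing")
        elif not check(value):
            errors.append(f"{field}: invalid value {value!r}")
    return errors


# Split incoming records into accepted rows and rejected rows with reasons.
incoming = [
    {"customer_id": 1, "email": "ana@example.com", "age": 34},
    {"customer_id": -5, "email": "not-an-email", "age": 34},
    {"customer_id": 2, "email": "bo@example.com"},
]
accepted, rejected = [], []
for record in incoming:
    problems = validate(record)
    if problems:
        rejected.append((record, problems))
    else:
        accepted.append(record)
```

Keeping the rejected records together with their reasons makes it easy to report problems back to whoever entered the data.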

Example:

Use Python to clean data:

```python
import pandas as pd

# Load data
data = pd.read_csv('data.csv')

# Remove exact duplicate rows
data = data.drop_duplicates()

# Fill missing values by carrying the last valid value forward
# (suits ordered data such as time series; leading NaNs stay missing)
data = data.ffill()

# Save cleaned data
data.to_csv('cleaned_data.csv', index=False)
```

2. Data Integration and Compatibility

Challenge:

Integrating data from multiple sources can be difficult due to differences in formats, structures, and systems.

Solution:

- ETL Processes: Use Extract, Transform, Load (ETL) processes to standardize and integrate data from different sources.

- APIs: Utilize APIs to facilitate data exchange between systems.

- Data Warehousing: Implement data warehousing solutions to centralize data from diverse sources.

Example:

Use an ETL tool like Apache NiFi to automate data integration tasks.
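NiFi configures these steps through its own interface, but the underlying extract-transform-load pattern is easy to see in a small script. The following is a minimal sketch and not a NiFi equivalent. It assumes two hypothetical sources that describe customers with different field names and date formats: a CSV export and a JSON API response, both inlined as strings so the example is self-contained. The script maps both onto one schema and loads the result into an in-memory SQLite table, which stands in for a warehouse.

```python
import csv
import io
import json
import sqlite3
from datetime import datetime

# Hypothetical source 1: CSV export with day/month/year dates.
CSV_SOURCE = """id,full_name,signup
1,Ana Silva,05/03/2024
2,Bo Chen,17/11/2023
"""

# Hypothetical source 2: JSON API response with other field names and ISO dates.
JSON_SOURCE = '[{"customerId": 3, "name": "Cy Diaz", "createdAt": "2024-01-09"}]'


def extract():
    """Extract: read raw records from each source in its native format."""
    csv_rows = list(csv.DictReader(io.StringIO(CSV_SOURCE)))
    json_rows = json.loads(JSON_SOURCE)
    return csv_rows, json_rows


def transform(csv_rows, json_rows):
    """Transform: map both sources onto one schema (id, name, ISO signup date)."""
    unified = []
    for row in csv_rows:
        date = datetime.strptime(row["signup"], "%d/%m/%Y").date().isoformat()
        unified.append((int(row["id"]), row["full_name"].strip(), date))
    for row in json_rows:
        unified.append((int(row["customerId"]), row["name"].strip(), row["createdAt"]))
    return unified


def load(rows, conn):
    """Load: write the unified rows into a single table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, name TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(*extract()), conn)
print(conn.execute("SELECT * FROM customers ORDER BY id").fetchall())
# [(1, 'Ana Silva', '2024-03-05'), (2, 'Bo Chen', '2023-11-17'), (3, 'Cy Diaz', '2024-01-09')]
```

The transform step carries most of the integration work: once every source speaks the same schema, loading is trivial.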

3. Handling Large Datasets

Challenge:

Analyzing large datasets can be resource-intensive and time-consuming.

Solution:

- Sampling: Use data sampling techniques to analyze a representative subset of the data.

- Big Data Technologies: Employ big data technologies like Hadoop and Spark to handle and process large datasets efficiently.

- Cloud Computing: Leverage cloud computing resources to scale data processing capabilities.
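When a dataset is too large to load at once, you can still draw a uniform random sample in a single pass. One standard technique is reservoir sampling (Algorithm R), sketched below. The sample size `k` and the fixed seed are illustrative choices. A uniform sample is representative only in the statistical sense, so rare groups can still be under-represented.

```python
import random
from typing import Iterable, Optional, TypeVar

T = TypeVar("T")


def reservoir_sample(stream: Iterable[T], k: int, seed: Optional[int] = None) -> list[T]:
    """Return up to k items chosen uniformly at random from a stream of unknown length.

    Uses O(k) memory and a single pass over the data (Algorithm R).
    """
    rng = random.Random(seed)
    reservoir: list[T] = []
    for i, item in enumerate(stream):
        if i < k:
            # Fill the reservoir with the first k items.
            reservoir.append(item)
        else:
            # Keep item i (0-based) with probability k / (i + 1),
            # replacing a uniformly chosen slot.
            j = rng.randint(0, i)
            if j < k:
                reservoir[j] = item
    return reservoir


# Sample 5 values from a million-item generator without materializing it.
sample = reservoir_sample((n * n for n in range(1_000_000)), k=5, seed=42)
print(sample)
```

Because the function accepts any iterable, you can pass an open text file directly, since iterating over it yields one line at a time. Skip the header line first.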

Example:

Using Apache Spark for big data processing:

```python
from pyspark.sql import SparkSession

# Initialize Spark session
spark = SparkSession.builder.appName("BigDataAnalysis").getOrCreate()

# Load large dataset
data = spark.read.csv('large_data.csv', header=True, inferSchema=True)

# Perform data analysis
data.groupBy("category").count().show()
```

4. Ensuring Data Privacy and Security

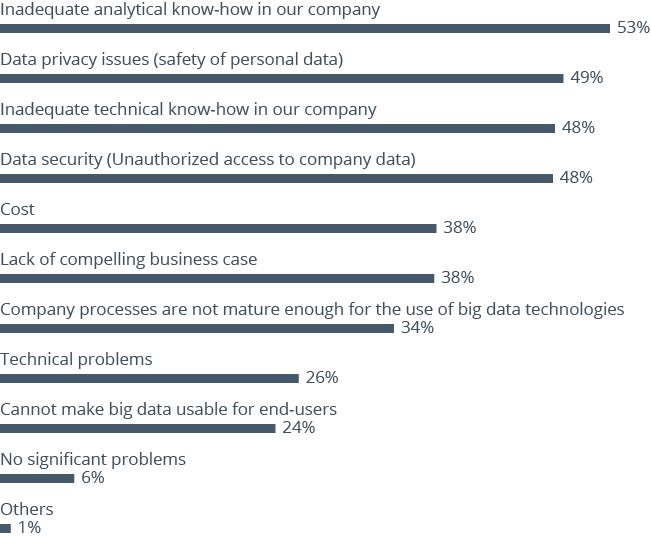

Challenge:

Protecting sensitive data while ensuring compliance with regulations is critical.

Solution:

- Encryption: Encrypt sensitive data both at rest and in transit.

- Access Controls: Implement strict access controls to limit who can view and manipulate data.

- Compliance: Ensure compliance with data privacy regulations like GDPR and CCPA.
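Access controls are often modeled as roles that grant specific permissions. The sketch below shows a deny-by-default role check in plain Python. The role names and permissions are hypothetical. In practice, these checks are usually enforced by the database or data platform itself, not only in application code.

```python
from enum import Enum, auto


class Permission(Enum):
    READ = auto()
    WRITE = auto()
    EXPORT = auto()


# Hypothetical roles: each lists exactly the permissions it grants.
ROLE_PERMISSIONS: dict[str, frozenset[Permission]] = {
    "viewer": frozenset({Permission.READ}),
    "analyst": frozenset({Permission.READ, Permission.EXPORT}),
    "admin": frozenset({Permission.READ, Permission.WRITE, Permission.EXPORT}),
}


class AccessDenied(Exception):
    """Raised when a role attempts an action it has not been granted."""


def can(role: str, permission: Permission) -> bool:
    """Deny by default: unknown roles and ungranted permissions return False."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())


def require(role: str, permission: Permission) -> None:
    """Guard to call before touching sensitive data."""
    if not can(role, permission):
        raise AccessDenied(f"role {role!r} lacks {permission.name}")


require("analyst", Permission.EXPORT)  # granted: returns without error
try:
    require("viewer", Permission.WRITE)
except AccessDenied as err:
    print(err)  # role 'viewer' lacks WRITE
```

Denying by default means a typo in a role name, or a role nobody has configured yet, fails closed and does not silently grant access.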

Example:

Using Python to encrypt data:

```python
from cryptography.fernet import Fernet

# Generate a key
key = Fernet.generate_key()
cipher_suite = Fernet(key)

# Encrypt data
cipher_text = cipher_suite.encrypt(b"Sensitive Data")

# Decrypt data
plain_text = cipher_suite.decrypt(cipher_text)
```

5. Choosing the Right Tools and Technologies

Challenge:

Selecting the appropriate tools and technologies for data analysis can be overwhelming given the numerous options available.

Solution:

- Needs Assessment: Conduct a needs assessment to identify the specific requirements of your analysis.

- Scalability: Choose tools that can scale with your data and analysis needs.

- Ease of Use: Consider the ease of use and learning curve associated with the tools.

Example:

Compare tools based on specific needs:

- Excel: For simple data analysis and visualization.

- Tableau/Power BI: For interactive dashboards and business intelligence.

- Python/R: For advanced statistical analysis and machine learning.

6. Interpreting and Communicating Results

Challenge:

Interpreting complex analysis results and communicating them effectively to stakeholders is often difficult.

Solution:

- Data Visualization: Use data visualization techniques to present data in an easy-to-understand format.

- Storytelling: Incorporate storytelling elements to explain the context and significance of the data insights.

- Audience Awareness: Tailor the communication of results to the audience’s level of expertise and interests.

Example:

Creating a simple visualization with Python’s matplotlib:

```python
import matplotlib.pyplot as plt

# Sample data
categories = ['A', 'B', 'C']
values = [10, 20, 30]

# Create bar chart
plt.bar(categories, values)
plt.xlabel('Categories')
plt.ylabel('Values')
plt.title('Sample Data Visualization')
plt.show()
```

7. Keeping Up with Evolving Technologies and Techniques

Challenge:

The field of data analysis is continuously evolving, with new tools, technologies, and techniques emerging regularly.

Solution:

- Continuous Learning: Stay updated with the latest trends and advancements through online courses, workshops, and webinars.

- Community Engagement: Participate in data science and analytics communities to share knowledge and learn from others.

- Experimentation: Regularly experiment with new tools and techniques to stay ahead of the curve.

Example:

Enroll in online courses on platforms like Coursera, edX, or Udacity to keep your skills up to date.

Conclusion

Data analysis is a vital function in today's data-driven world, but it comes with its share of challenges. By addressing data quality, integration, large datasets, privacy, tool selection, result interpretation, and the pace of technological change, you can make your data analysis more effective. These strategies will improve the accuracy and reliability of your analysis. They will also help you uncover meaningful insights and make informed decisions for your organization.

Embrace these challenges as opportunities to refine your skills and processes, and you’ll be well on your way to mastering the art of data analysis.